Every year CRDG’s team of evaluators produces evaluation studies for internal and external projects. But what most people don’t see is the thoughtful reflection that goes on “behind the scenes,” reflection that both informs their work and contributes to the larger body of knowledge on the theory, methods, and practices that make up the profession of evaluation.

Following the release last year of Fundamental Issues in Evaluation, a major book CRDG’s Paul Brandon co-edited with Nick L. Smith of Syracuse University, Brandon has continued his research on the theory, methods, and practice of program evaluation with articles about the methods used in some of his recent evaluation projects. In “The Inquiry Science Implementation Scale: Instrument Development and the Results of Validation Studies,” a collaboration with CRDG director Donald Young, evaluator Alice Taum, and science education researcher Frank Pottenger, Brandon discussed both the instrument the team developed and the uses of the final data it produced in their recent project on scaling up middle school science programs. Brandon also published an article with Malkeet Singh in the American Journal of Evaluation in which they reviewed and critiqued the literature on the use of evaluation findings by evaluation clients such as program personnel and policy makers. The article showed that the research in this area is less comprehensive and less methodologically sound than many in the evaluation community have long believed.

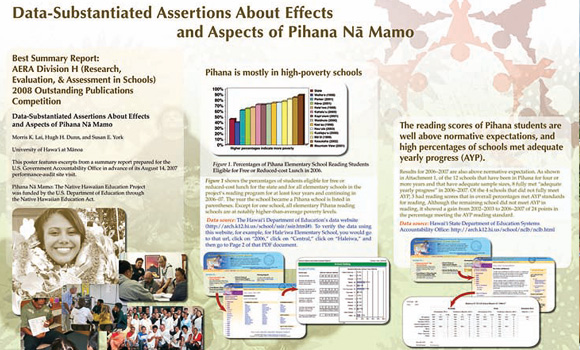

Morris Lai, longtime evaluator and principal investigator for the Pihana Nā Mamo Native Hawaiian Education Project, continued his work this year on indigenous approaches to evaluation. Lai is a member of the Evaluation Hui, a group of Māori and Kanaka Maoli (Native Hawaiian) evaluators developing and disseminating methods appropriate for evaluations involving indigenous peoples. His presentations this year included two papers, “Time to Stop Privileging the Ways of Cultural Dominators” and “Personal Reflections of Using Indigenous Traditional Cultural Values as a Teaching Strategy in Education,” at the Pacific Circle Consortium conference in Taipei, Taiwan. At the American Evaluation Association Annual Conference in Orlando, Florida, he presented a workshop entitled “Contextualizing Your Inner Evaluator: Embracing Other World Views” (together with Donna Murtons, Hazel Simonet, and Alice Kawakami) and, with CRDG’s Sue York, a paper entitled “Empowerment, Collaborative, and Participatory Evaluation: Too Apologetic.”

Lai is also a member of the Native Hawaiian Education Council (NHEC), a group working to develop indicators for measuring the work done with grants made under the Native Hawaiian Education Act, indicators that are culturally and linguistically aligned with the community the grants are meant to serve. In line with these goals, Lai focuses on approaches to evaluation that honor and respect the world views of the target community, and on evaluator-program relationships built on respect, trust, honor, and responsibility, all essential elements of culturally appropriate evaluation in Native Hawaiian and other indigenous communities.

Terry Higa’s work evaluating state- or federally funded programs in Hawai’i Department of Education schools rests on this same idea: that relationships are a key factor in determining the success of a project. Her approach, based on the practical participatory evaluation method developed by Brad Cousins at the University of Ottawa, involves project staff in the project evaluation, including, if possible, its initial design. Higa sees herself as a bridge between the project and the funding agency: her job is to design an evaluation whose methods project staff will understand and use and that will provide the funding agency with the data it needs. Regular contact with project staff builds evaluation capacity within the project by steadily increasing the staff’s understanding of the links among the funding agency’s evaluation requirements, the evaluation design, the required evaluation data, and the quality controls in place for those data. Higa reports that schools have expressed appreciation for this approach because it offers a cost-effective and highly useful evaluation while still respecting their communities and ways of doing things.

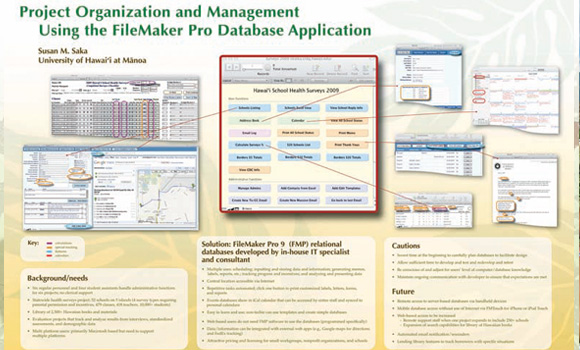

Susan Saka strives for this same level of partnership while working with large numbers of teachers and students. Drawing on her years of experience conducting the Hawai’i Youth Risk Behavior Surveys and the Hawai’i Youth Tobacco Survey for the Hawai’i Departments of Education and Health, as part of a larger locally coordinated effort funded by the Hawai’i Department of Health and the Centers for Disease Control and Prevention (CDC), she has a long list of lessons learned that all come down to building strong relationships. To collect her data, she needs buy-in from the teachers who must make time for the surveys in their classes, which is especially important now that the focus on testing takes up so much of their time. She combines attention to cultural considerations, personal incentives, and education about the value of the data to earn that buy-in. She also makes the process as easy as possible for teachers by being highly organized, with the help of a FileMaker Pro database that CRDG’s Derrick Okihara created for her projects. Saka’s poster session at this year’s American Evaluation Association Annual Conference in Orlando, Florida, highlighted that database, showing how it manages scheduling; stores data and information; generates memos, labels, and forms; tracks progress; analyzes data; and generates reports for a project involving 52 schools, more than 500 teachers, and 10,000 students.